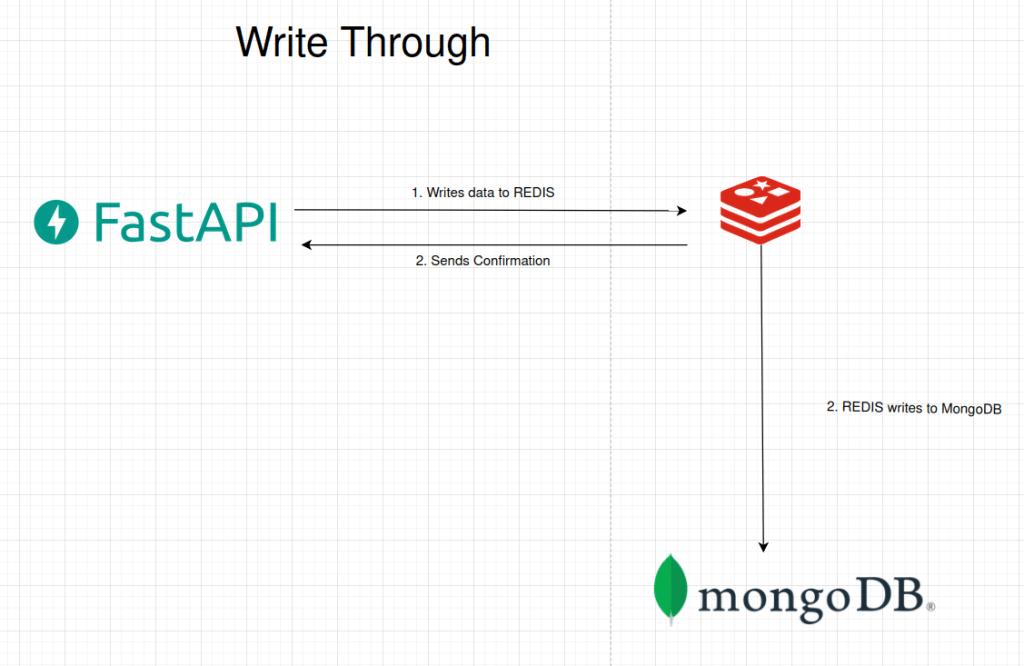

This is application-level caching: the application writes data directly to Redis, and Redis then writes it to the primary database (MongoDB in our example).

Steps:

- The application (FastAPI) writes the data to Redis.

- Redis writes the data to MongoDB.

- The application reads the data from Redis, since it has already been written there.
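
The three steps above can be sketched without any infrastructure. In this minimal, illustrative Python sketch, two plain dictionaries stand in for Redis and MongoDB. The class name `WriteThroughCache` and its methods are hypothetical; they only show the order of operations, not how RedisGears implements it.

```python
class WriteThroughCache:
    """Toy write-through cache; dicts stand in for Redis and MongoDB (assumption)."""

    def __init__(self, database):
        self.cache = {}           # plays the role of Redis
        self.database = database  # plays the role of MongoDB

    def write(self, key, value):
        # Step 1: the application writes to the cache.
        self.cache[key] = value
        # Step 2: the cache forwards the write to the database
        # before the write call returns.
        self.database[key] = value

    def read(self, key):
        # Step 3: reads are served from the cache.
        return self.cache.get(key)


mongo = {}
cache = WriteThroughCache(mongo)
cache.write("todos:1", {"id": "1", "name": "buy milk"})
print(cache.read("todos:1"))
print(mongo["todos:1"])
```

Because `write` updates both dictionaries before returning, a read that follows a write always sees the same value in the cache and in the stand-in database.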

Implementation

We are going to create a mock to-do application. Let’s run both Redis and MongoDB via Docker Compose:

```yaml
# docker-compose.yml
version: '3.8'

services:
  redisgears:
    image: redislabs/redismod:latest
    container_name: redis_gears_container
    networks:
      - my_network
    ports:
      - "6379:6379"
    environment:
      - MONGO_URI=mongodb://mongodb_container:27017

  mongodb:
    image: mongo
    container_name: mongodb_container
    networks:
      - my_network
    ports:
      - "27017:27017"
    command: --bind_ip_all

networks:
  my_network:
    driver: bridge
```

Start the services defined in docker-compose.yml:

```shell
docker compose up
```

Now we have to tell Redis that whenever data is written to it, it should send that data to MongoDB as well.

We can set up this communication with RedisGears and the rgsync package. Below is the script that tells Redis to write to MongoDB. (The environment variable is taken from the docker-compose file.)

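```python
# app.py
import os

from rgsync import RGJSONWriteThrough
from rgsync.Connectors import MongoConnector, MongoConnection

# MONGO_URI is set in the environment section of docker-compose.yml
mongoUrl = os.environ.get('MONGO_URI')

# The first four arguments are left empty because the connection
# string is passed as the last argument
connection = MongoConnection("", "", "", "", mongoUrl)

db = "test"
collection = "todos"

# 'id' is the field used as the primary key for the todos collection
todoConnector = MongoConnector(connection, db, collection, 'id')

# GB (GearsBuilder) is provided by the RedisGears runtime, so it is not imported
RGJSONWriteThrough(GB, keysPrefix='todos',
                   connector=todoConnector, name='TodoWriteThrough',
                   version='99.99.99')
```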
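
To make the roles of `keysPrefix` and the primary key more concrete, the sketch below shows how a key such as `todos:test_id` can be split into a prefix and an identifier. The helper `split_key` is hypothetical and is not part of rgsync; it simply assumes the `prefix:id` key layout used later in this post.

```python
def split_key(key, separator=":"):
    """Split 'prefix:id' into (prefix, id). Hypothetical helper, not rgsync code."""
    prefix, sep, identifier = key.partition(separator)
    if not sep or not identifier:
        raise ValueError(f"expected a key like 'todos:<id>', got {key!r}")
    return prefix, identifier


prefix, todo_id = split_key("todos:test_id")
print(prefix, todo_id)
```

Under this assumption, `todos` would match the `keysPrefix` registered above, and `test_id` would be the value expected in the `id` field.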

Now we have the module that should run whenever something is written to Redis. Next we need to load this code into Redis. For that we can use either the gears-cli tool or the RG.PYEXECUTE command.

In this post we use RG.PYEXECUTE via a Node.js script:

```javascript
// script.js
// Uses ES module imports, so package.json needs "type": "module"
import fs from "fs";
import { createClient } from "redis";

const redisConnectionUrl = "redis://127.0.0.1:6379";
const pythonFilePath = "app.py";

const runWriteThroughRecipe = async () => {
  // Python packages that RedisGears installs before running the script
  const requirements = ["rgsync", "pymongo==3.12.0"];
  const writeThroughCode = fs.readFileSync(pythonFilePath).toString();

  const client = createClient({ url: redisConnectionUrl });
  await client.connect();

  // RG.PYEXECUTE <code> REQUIREMENTS <package> [<package> ...]
  const params = ["RG.PYEXECUTE", writeThroughCode,
    "REQUIREMENTS", ...requirements];

  try {
    await client.sendCommand(params);
    console.log("RedisGears WriteThrough set up completed.");
  } catch (err) {
    console.error("RedisGears WriteThrough setup failed !");
    console.error(JSON.stringify(err, Object.getOwnPropertyNames(err), 4));
  }
  process.exit();
};

runWriteThroughRecipe();
```

Execute it:

```shell
node script.js
```

Running this installs the packages inside Redis and registers the write-through recipe.

Now everything is set. We can test it by writing data to Redis and checking whether the data is available in MongoDB.

Note: Create a test database with a todos collection in MongoDB.

Now let’s test it. Run the script below, which inserts data into Redis.

```python
import redis
from redis.commands.json.path import Path

r = redis.Redis(host='localhost', port=6379, decode_responses=True)

todo = {
    "id": "test_id",
    "name": "some todo",
    "description": "some description"
}

# The key starts with the 'todos' prefix registered in app.py
r.json().set("todos:test_id", Path.root_path(), todo)
```
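
One way to check the result is to list what landed in the `todos` collection. The sketch below is only an illustration under stated assumptions: `fetch_documents` is a hypothetical helper that accepts any object with a pymongo-style `find()` method, and `FakeCollection` is an in-memory stand-in so the snippet runs without a database. The exact shape of the documents that rgsync writes is not shown in this post, so the sample document here is made up.

```python
def fetch_documents(collection, query=None):
    """Return all documents matching query from a pymongo-style collection."""
    return list(collection.find(query or {}))


class FakeCollection:
    """In-memory stand-in for a pymongo collection (illustration only)."""

    def __init__(self, documents):
        self._documents = documents

    def find(self, query):
        # Keep documents whose fields equal every key/value pair in the query.
        return [d for d in self._documents
                if all(d.get(k) == v for k, v in query.items())]


todos = FakeCollection([{"id": "test_id", "name": "some todo"}])
print(fetch_documents(todos, {"id": "test_id"}))
```

Against the containers started earlier, the same helper could be called with `pymongo.MongoClient("mongodb://localhost:27017")["test"]["todos"]` in place of the fake collection.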

Awesome, isn’t it? We have now achieved a write-through cache.

Please try out the code: https://github.com/syedjaferk/redis-cache

Advantages:

- Data Consistency: Data in the cache is always consistent with the data in the main storage (the primary database in our case), reducing the risk of data loss.

- Simpler Design: Error recovery and cache coherence mechanisms are simpler since the data is always in sync.

- Predictable Latency: Since writes are immediately propagated to the main storage, the system’s behavior is more predictable.

Disadvantages:

- Higher Latency for Writes: Each write operation has to wait until it is confirmed by the main storage (the primary database in our case), leading to slower write performance.

- Increased Bandwidth Usage: Writing every update to both the cache and main storage can result in higher bandwidth usage.

Scenarios to Use:

- Critical Data Integrity: Applications where data integrity is crucial, such as financial systems or database management systems.

- Simpler Consistency Management: Systems where simpler consistency models are preferred over performance.

- High Read-to-Write Ratio: Workloads where reads are much more frequent than writes, reducing the performance impact of write latency.
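
The trade-off between write latency and read-to-write ratio can be made concrete with a little arithmetic. The figures below (1 ms for a cache operation, 10 ms for a database write) are made-up assumptions for illustration, not measurements, and `average_latency_ms` is a hypothetical helper.

```python
def average_latency_ms(read_fraction, cache_ms=1.0, db_write_ms=10.0):
    """Average latency per operation under write-through (assumed timings)."""
    read_ms = cache_ms                 # reads are served from the cache
    write_ms = cache_ms + db_write_ms  # writes wait for cache and database
    return read_fraction * read_ms + (1 - read_fraction) * write_ms


for fraction in (0.95, 0.50):
    print(f"{fraction:.0%} reads: {average_latency_ms(fraction):.2f} ms")
```

With these assumed numbers, a workload that is 95% reads averages about 1.5 ms per operation, while one that is 50% reads averages about 6 ms, which is why write-through suits read-heavy workloads better.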