Today I enrolled in a Udemy course on cloud design patterns, and Gatekeeper is the first pattern on its list. In this post I collect my notes from the video and try to implement the pattern with HAProxy.

Gatekeeper Cloud Pattern

The Gatekeeper Design Pattern is a way to control access to resources or operations in your application. It acts as a mediator to ensure that only authorized or validated requests can proceed to the next stage of execution. Implementing this pattern locally could involve various use cases like authentication, rate limiting, or feature gating.

Analogy: A gatekeeper is like a security guard standing at the main door. If it helps, think of a gatekeeper as a firewall in a typical network topology.

Things to consider while using the gatekeeper pattern:

- The gatekeeper (GK) should have only minimal access: it holds no access keys or tokens.

- The GK should not perform any application-related operations. It only needs to validate and filter requests.

- Adding an extra layer for the gatekeeper can slow things down a bit because it requires extra work, like checking rules and handling network traffic.

- If the gatekeeper itself stops working, it blocks everything, because it controls all access. It is a single point of failure. To avoid this, run redundant gatekeeper instances and use autoscaling to add more when needed. This keeps the system reliable as the number of users grows.
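To make the considerations above concrete, here is a minimal Python sketch of a gatekeeper that only validates and filters, then hands the request to a backend. The Request and Response types, the allow-lists, and the size limit are all hypothetical names chosen for illustration; they do not come from the course or from any library.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class Request:
    method: str
    path: str
    body: bytes = b""


@dataclass(frozen=True)
class Response:
    status: int
    body: str


# Hypothetical validation rules, used only for this example.
ALLOWED_METHODS = {"GET", "POST"}
ALLOWED_PATHS = {"/", "/health"}
MAX_BODY_BYTES = 1024


def gatekeeper(request: Request, forward: Callable[[Request], Response]) -> Response:
    """Validate and filter only. No application logic and no credentials live here."""
    if request.method not in ALLOWED_METHODS:
        return Response(405, "method not allowed")
    if request.path not in ALLOWED_PATHS:
        return Response(404, "unknown path")
    if len(request.body) > MAX_BODY_BYTES:
        return Response(413, "payload too large")
    # Every check passed, so hand the request to the trusted backend.
    return forward(request)


def backend(request: Request) -> Response:
    """Stand-in for the real application behind the gatekeeper."""
    return Response(200, f"handled {request.method} {request.path}")
```

Calling gatekeeper(Request("GET", "/"), backend) reaches the backend, while a request with a disallowed method or path is rejected before the backend ever sees it.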

Again, this is a concept, which means we can achieve the same result with different tools, such as:

- Application Gateway

- API Management

- Web Application Firewall

- OAuth

- Reverse Proxies

- Custom Gatekeeper Services (for example, built on AWS Lambda)

Local Implementation

Here we implement a rate limiter using HAProxy, which acts as the gatekeeper.

GitHub: https://github.com/syedjaferk/gatekeeper_cloud_design_pattern_ha_proxy

HAProxy's stick tables can track client IPs and their request rates. Here is a minimal configuration:

haproxy.cfg

    global
        log /dev/log local0
        log /dev/log local1 notice
        maxconn 2000
        user haproxy
        group haproxy
        daemon

    defaults
        log global
        mode http
        option httplog
        option dontlognull
        timeout connect 5000ms
        timeout client 50000ms
        timeout server 50000ms

    frontend http_front
        mode http
        bind *:8090
        stick-table type ipv6 size 100k expire 30s store http_req_rate(10s)
        http-request track-sc0 src
        http-request deny deny_status 429 if { sc_http_req_rate(0) gt 2 }
        default_backend http_back

    backend http_back
        mode http
        server app1 flask_app:5000 check

Explanation:

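- Stick Table: Tracks each client IP and its HTTP request rate over a 10-second window. Entries expire after 30 seconds of inactivity.

- ACL: Checks whether the tracked rate exceeds 2 requests per 10 seconds (the condition sc_http_req_rate(0) gt 2).

- Deny Rule: Rejects requests with HTTP status 429 when the ACL condition is met.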

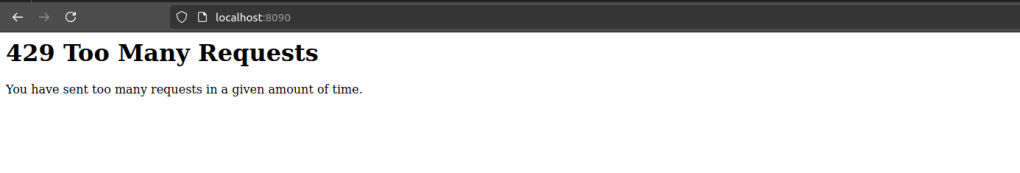
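The stick-table logic can be modelled in a few lines of Python to see which requests pass. This is a simplified sketch, not HAProxy's actual implementation: it keeps an exact list of timestamps per client, whereas HAProxy keeps an approximate rate counter, and the class name SlidingWindowLimiter is made up for this example. It assumes, as in the configuration, that a request is counted before the limit is checked.

```python
from collections import defaultdict, deque


class SlidingWindowLimiter:
    """Simplified model of the stick-table rule: deny when rate > limit."""

    def __init__(self, limit: int = 2, window_seconds: float = 10.0):
        self.limit = limit
        self.window = window_seconds
        # One queue of request timestamps per client IP.
        self._hits = defaultdict(deque)

    def allow(self, client_ip: str, now: float) -> bool:
        hits = self._hits[client_ip]
        # Drop timestamps that have fallen out of the window.
        while hits and now - hits[0] >= self.window:
            hits.popleft()
        # Assumption: like track-sc0, count the request first, then check,
        # so denied requests still add to the client's rate.
        hits.append(now)
        return len(hits) <= self.limit


limiter = SlidingWindowLimiter()
# Four requests from one client, one second apart.
results = [limiter.allow("203.0.113.7", now=t) for t in (0.0, 1.0, 2.0, 3.0)]
print(results)  # [True, True, False, False]
```

In this model the first two requests in a window pass and the rest are refused until the older timestamps age out, which matches the gt 2 threshold in the deny rule.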

Testing Rate Limiting

Use a tool like Apache Benchmark (ab) to simulate traffic:

ab -n 20 -c 1 http://localhost:8080/

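-n 20: Total number of requests.

-c 1: Concurrency of 1, so requests are sent one at a time from a single client.

The URL must point at the port where HAProxy is reachable. The configuration above binds port 8090, so port 8080 only works if something such as a container port mapping forwards it to 8090.

If rate limiting is configured correctly, requests exceeding the limit are denied with status 429, which ab counts under "Non-2xx responses".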
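If ab is not installed, a small Python script can do the same check. This is a sketch under assumptions: the helper names fetch_status and probe are hypothetical, only the standard library is used, and the URL you pass in should be the one from the ab command.

```python
from collections import Counter
from typing import Callable
import urllib.error
import urllib.request


def fetch_status(url: str) -> int:
    """Return the HTTP status code for one GET request."""
    try:
        with urllib.request.urlopen(url, timeout=5) as response:
            return response.status
    except urllib.error.HTTPError as err:
        # urllib raises for 4xx/5xx responses, so recover the code (e.g. 429) here.
        return err.code


def probe(url: str, total: int, fetch: Callable[[str], int] = fetch_status) -> Counter:
    """Send `total` sequential requests and count how often each status occurs."""
    return Counter(fetch(url) for _ in range(total))
```

Running probe("http://localhost:8080/", 20) against the setup above should return a Counter dominated by 429 entries if the limit is working. The fetch parameter exists so the counting logic can be tried without a running server.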

References:

- Microsoft Gatekeeper – https://learn.microsoft.com/en-us/azure/architecture/patterns/gatekeeper

- Application Gateway – https://azure.microsoft.com/en-us/services/application-gateway/

- API Management – https://azure.microsoft.com/en-ca/services/api-management/

- AWS Gatekeeper for security – https://aws.amazon.com/blogs/networking-and-content-delivery/limiting-requests-to-a-web-application-using-a-gatekeeper-solution/