Today, I learned about the circuit breaker pattern, which helps prevent cascading failures in systems. At first, I thought, “Why not just handle this using a retry pattern?” or “If a server is down, let it just return a failure message. Why bring in an extra layer?” The answer lies in avoiding cascading failures, where one issue can snowball and bring down the entire system.

In this blog post, I jot down my notes on the circuit breaker pattern for my future self and for you.

What is a Circuit Breaker?

A Circuit Breaker is a design pattern used to prevent cascading failures in a system. It monitors the interactions between services and limits the impact of failures by controlling the flow of traffic to a failing service.

Think of it like an electrical circuit breaker in your house. When there’s a power overload, the circuit breaker trips to prevent further damage. Similarly, in a software context, the circuit breaker detects failures and “trips,” halting requests to the problematic service until it recovers.

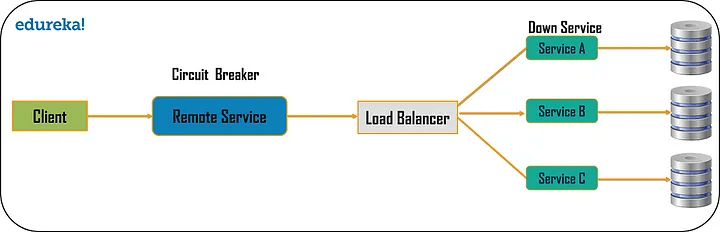

As an example, assume a consumer sends a request to get data from multiple services, but one of the services is unavailable due to technical issues. You now face two main problems:

- First, the consumer is unaware that this service is unavailable (failed), so it keeps sending requests to it.

- Second, network resources are tied up by these requests and eventually exhausted, degrading performance and the user experience.

📒 You can use the Circuit Breaker design pattern to avoid these issues. The consumer invokes the remote service through a proxy, and this proxy acts as the circuit breaker between the consumer and the service.

📒 When the number of failures reaches a certain threshold, the circuit breaker trips for a defined duration of time.

📒 During this timeout period, any requests to the offline server fail immediately. When the timeout period is up, the circuit breaker allows a limited number of test requests to pass, and if those requests succeed, the circuit breaker returns to normal operation. If any of them fails, the timeout period starts again.
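The proxy described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not a production implementation: the names `CircuitBreaker`, `CircuitOpenError`, `failure_threshold` and `reset_timeout_s` are hypothetical, the breaker counts consecutive failures, it lets a single trial request through after the timeout (the simplest "limited number"), and it is not thread-safe. The clock is injectable so the timeout can be demonstrated without real waiting.

```python
import time
from typing import Callable, TypeVar

T = TypeVar("T")


class CircuitOpenError(Exception):
    """Raised when the breaker rejects a call without contacting the service."""


class CircuitBreaker:
    """Minimal single-threaded circuit breaker proxy (illustrative sketch)."""

    def __init__(self, failure_threshold: int = 3, reset_timeout_s: float = 10.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.failure_threshold = failure_threshold  # consecutive failures before tripping
        self.reset_timeout_s = reset_timeout_s      # how long the circuit stays open
        self.clock = clock                          # injectable for tests
        self.state = "closed"
        self.failures = 0
        self.opened_at = 0.0

    def call(self, operation: Callable[[], T]) -> T:
        if self.state == "open":
            if self.clock() - self.opened_at < self.reset_timeout_s:
                # Fail fast: the remote service is not contacted at all.
                raise CircuitOpenError("circuit is open")
            # Timeout elapsed: let this request through as a trial.
            self.state = "half-open"
        try:
            result = operation()
        except Exception:
            self._record_failure()
            raise
        # Any success (including a passing trial) closes the circuit and resets the count.
        self.state = "closed"
        self.failures = 0
        return result

    def _record_failure(self) -> None:
        self.failures += 1
        # A failed trial re-opens the circuit at once; otherwise trip at the threshold.
        if self.state == "half-open" or self.failures >= self.failure_threshold:
            self.state = "open"
            self.opened_at = self.clock()  # (re)start the timeout period


# Usage with a fake clock so no real waiting is needed.
now = [0.0]
breaker = CircuitBreaker(failure_threshold=2, reset_timeout_s=5.0, clock=lambda: now[0])

def service_a_down() -> str:
    raise ConnectionError("service A is down")

for _ in range(2):
    try:
        breaker.call(service_a_down)
    except ConnectionError:
        pass
print(breaker.state)                # open

now[0] = 1.0                        # still inside the timeout period
try:
    breaker.call(service_a_down)
except CircuitOpenError as err:
    print(err)                      # circuit is open

now[0] = 6.0                        # the timeout has elapsed
print(breaker.call(lambda: "ok"))   # ok (the trial request succeeds)
print(breaker.state)                # closed
```

A real deployment would also guard the state with a lock and cap how many trial requests may run concurrently while half-open; both are left out here to keep the state logic visible.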

A scenario that can break your services

📒 Assume you have 5 different services and a web server that calls them. When a request arrives, the server allocates one thread to call the relevant service.

📒 Now suppose one of these services is responding slowly (due to a failure), so the thread calling it has to wait. One waiting thread would be fine.

📒 But if this is a high-demand service (it keeps receiving more and more requests), more threads are allocated to call it, and all of them have to wait.

📒 So if you had 100 threads, 98 of them could end up waiting on this service, and if the remaining two are busy with two other services, every thread is now blocked.

📒 Any further requests that reach your server are queued (blocked). Say 50 more requests arrive in the meantime: all of them are queued because every thread is frozen.

📒 A few seconds later, the failed service recovers and the web server starts processing the queued requests. Even so, the web server (or the proxy) may never recover, because new requests keep arriving while it is still working through the backlog. This kind of scenario can kill your service.
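The thread-exhaustion scenario above can be reproduced in a scaled-down form with Python's `concurrent.futures.ThreadPoolExecutor`. In this sketch a pool of 4 workers stands in for the 100 threads, a `threading.Event` stands in for the slow service finally answering, and `count_queued` is a hypothetical helper that reports how many requests are stuck waiting for a free thread.

```python
import threading
from concurrent.futures import ThreadPoolExecutor


def count_queued(pool_size: int, incoming: int) -> int:
    """Return how many requests wait in the queue while every worker is blocked."""
    recovered = threading.Event()      # set when the slow service finally answers
    busy = threading.Semaphore(0)      # released once per request that got a thread

    def call_slow_service(request_id: int) -> int:
        busy.release()                 # this request now occupies a worker thread
        recovered.wait()               # ...and blocks until the service responds
        return request_id

    with ThreadPoolExecutor(max_workers=pool_size) as pool:
        futures = [pool.submit(call_slow_service, i) for i in range(incoming)]
        for _ in range(min(pool_size, incoming)):
            busy.acquire()             # wait until every available worker is stuck
        # Requests that are neither running nor finished are still queued.
        queued = sum(1 for f in futures if not f.running() and not f.done())
        recovered.set()                # the service recovers; the backlog drains
    return queued


print(count_queued(pool_size=4, incoming=10))  # 6
```

With a circuit breaker in front of the slow service, those calls would fail fast instead of holding a worker, so the pool would stay free for the other services.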

Key States of a Circuit Breaker

- Closed:
  - All requests are allowed to pass through.
  - The system monitors the success and failure rates.
  - If failures exceed a predefined threshold, the circuit trips to the Open state.

- Open:
  - Requests are blocked immediately, and an error is returned without calling the service.
  - This state protects the system from further strain.
  - A timer is started; when it expires, the circuit moves to Half-Open to check whether the service has recovered.

- Half-Open:
  - A limited number of trial requests are allowed to pass through.
  - If they succeed, the circuit transitions back to Closed.
  - If they fail, the circuit returns to Open.
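The three states and their transitions can be written down as a small state machine. This Python sketch uses hypothetical `State` and `Trigger` enums and a transition table; it models only the state changes listed above, not the request handling or the timer itself.

```python
from enum import Enum


class State(Enum):
    CLOSED = "closed"
    OPEN = "open"
    HALF_OPEN = "half-open"


class Trigger(Enum):
    FAILURE_THRESHOLD_EXCEEDED = "failure threshold exceeded"
    TIMEOUT_EXPIRED = "timeout expired"
    TRIAL_SUCCEEDED = "trial requests succeeded"
    TRIAL_FAILED = "trial request failed"


# (current state, trigger) -> next state, following the list above.
TRANSITIONS = {
    (State.CLOSED, Trigger.FAILURE_THRESHOLD_EXCEEDED): State.OPEN,
    (State.OPEN, Trigger.TIMEOUT_EXPIRED): State.HALF_OPEN,
    (State.HALF_OPEN, Trigger.TRIAL_SUCCEEDED): State.CLOSED,
    (State.HALF_OPEN, Trigger.TRIAL_FAILED): State.OPEN,
}


def next_state(state: State, trigger: Trigger) -> State:
    # Pairs not in the table leave the state unchanged.
    return TRANSITIONS.get((state, trigger), state)


state = State.CLOSED
for trigger in (Trigger.FAILURE_THRESHOLD_EXCEEDED, Trigger.TIMEOUT_EXPIRED,
                Trigger.TRIAL_FAILED, Trigger.TIMEOUT_EXPIRED, Trigger.TRIAL_SUCCEEDED):
    state = next_state(state, trigger)
    print(f"{trigger.value} -> {state.value}")
```

Keeping the transitions in one table makes it easy to check that no state can be reached except through the rules in the list.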

📒 The circuit breaker operates normally (passing requests through to the service) when it is in the “closed” state. When failures exceed the threshold, the circuit breaker trips. As seen in the diagram above, this “opens” the circuit (the state switches to “open”).

📒 When the circuit is “open”, incoming requests return an error immediately, without any attempt to execute the real operation.

📒 After the timeout period, the circuit breaker goes into the “half-open” state. In this state, it allows a limited number of test requests to pass through. If they succeed, the circuit breaker resets to the “closed” state and traffic flows as usual. If they fail, the circuit breaker returns to the “open” state until the next timeout.

So how does it reconnect to the service?

📒 In the background, it periodically sends a ping request (or a default request) to service A, the failing service. When the response time comes back within the normal threshold, it lets requests through again.
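That background check can be sketched as a probe loop. In this hedged Python example, `probe_until_healthy` and its parameters are hypothetical, `ping` stands for whatever lightweight request the service accepts, and "healthy" is assumed to mean a ping that succeeds within `max_latency_s`. The `sleep` and `clock` hooks are injectable so the loop can be tested without real delays.

```python
import time
from typing import Callable


def probe_until_healthy(
    ping: Callable[[], None],
    max_latency_s: float,
    interval_s: float,
    max_attempts: int,
    sleep: Callable[[float], None] = time.sleep,
    clock: Callable[[], float] = time.monotonic,
) -> bool:
    """Return True once a ping succeeds within max_latency_s, else False after max_attempts."""
    for _ in range(max_attempts):
        start = clock()
        try:
            ping()                      # hypothetical lightweight health-check request
        except Exception:
            pass                        # still failing: keep the circuit open
        else:
            if clock() - start <= max_latency_s:
                return True             # response time is back to normal: close the circuit
        sleep(interval_s)               # wait before probing again
    return False


attempts = {"n": 0}

def flaky_ping() -> None:
    attempts["n"] += 1
    if attempts["n"] < 3:               # service A is down for the first two probes
        raise ConnectionError("service A is still down")

print(probe_until_healthy(flaky_ping, max_latency_s=0.5, interval_s=0.01, max_attempts=5))  # True
```

Active probing like this is one variation; the half-open state described earlier instead uses a few real requests as the test.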

📒 From then on, new requests for service A go directly to service A.

During the failure, every request for service A is sent back immediately with an error message, so no queue builds up. Once the service is back online, it is open to new traffic again.

But didn’t we fail to respond to some of the consumer requests? 🙄

📒 Yes. Some requests were sent back to the consumer with an error message instead of being queued, and they never got a response from service A.

📒 But what would have happened if those requests had tried to reach the failed service A and been queued?

📒 The whole system could have failed because of the huge queue built up behind the service: even after the service recovers, the queued requests hit service A all at once and can bring it down again.

📒 With the circuit breaker, service A may still be unavailable for some time, and some requests will get an error instead of a response from service A. But as soon as service A comes back online, new incoming traffic is served again, so a cascading failure does not occur.
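The queue argument can be made concrete with back-of-the-envelope arithmetic. This Python sketch (the helper `backlog_over_time` and all numbers are hypothetical) tracks the queue once service A is back: each second new requests arrive and the service handles up to its capacity. A backlog drains only if capacity exceeds the arrival rate, while with a circuit breaker the backlog starts at zero.

```python
from typing import List


def backlog_over_time(initial_backlog: int, arrivals_per_s: int,
                      capacity_per_s: int, seconds: int) -> List[int]:
    """Queue length at the end of each second after the service recovers."""
    backlog = initial_backlog
    history = []
    for _ in range(seconds):
        backlog += arrivals_per_s                 # new traffic keeps arriving
        backlog -= min(backlog, capacity_per_s)   # the service handles what it can
        history.append(backlog)
    return history


# Hypothetical numbers: 50 queued requests, 100 new requests per second.
print(backlog_over_time(50, 100, 110, 5))  # [40, 30, 20, 10, 0]   spare capacity drains it
print(backlog_over_time(50, 100, 100, 5))  # [50, 50, 50, 50, 50]  no spare capacity: never drains
print(backlog_over_time(0, 100, 100, 5))   # [0, 0, 0, 0, 0]       breaker: no backlog to drain
```

The model is deliberately simple: it ignores timeouts, retries and the service slowing down under load.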

📒 That’s the principle behind the Circuit Breaker pattern.